AI Ethics and Responsible Deployment: A Roadmap for Irish, NI, and UK Businesses

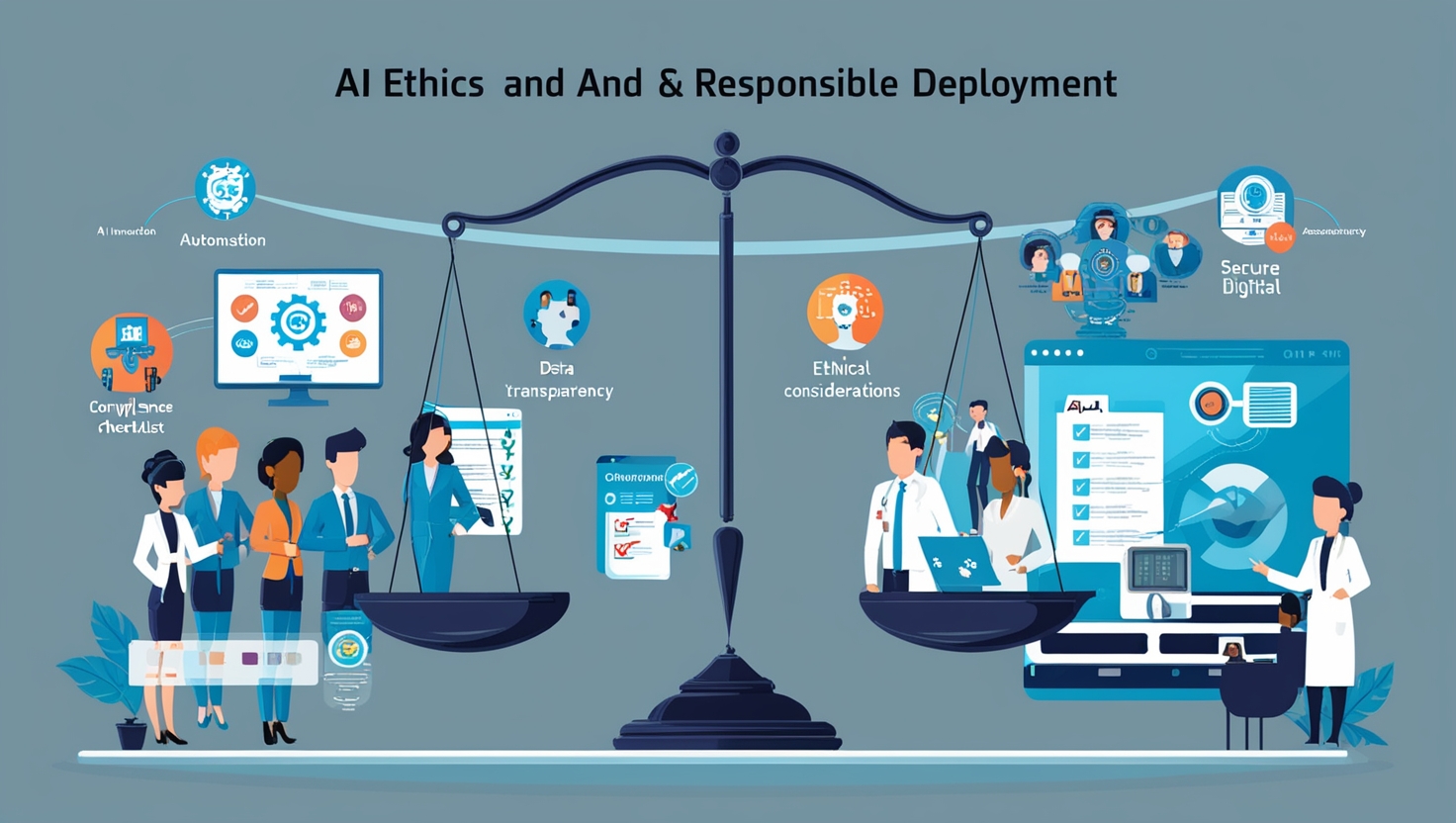

As artificial intelligence (AI) becomes integral to everyday business across Ireland, Northern Ireland, and the UK, concerns around ethical usage, responsible deployment, and fairness grow. Beyond regulatory compliance, adopting ethical AI can build user trust, reduce reputational risks, and even serve as a competitive advantage. This article delves into why AI ethics and responsible deployment matter, how data biases emerge, and practical steps for SMEs and larger enterprises to ensure responsible AI usage.

“In a rapidly digitising economy, ethical AI isn’t a box to tick—it’s about building trust with customers who want reassurance that technology won’t disadvantage or exploit them,” says Ciaran Connolly, Director of ProfileTree.

Why AI Ethics and Responsible Deployment Matter for UK and Irish Businesses

As AI adoption accelerates, UK and Irish businesses must prioritise ethical considerations and responsible deployment to maintain trust, comply with regulations, and mitigate risks. Bias in AI models, data privacy concerns, and transparency in decision-making are key challenges that can impact brand reputation and legal compliance. With evolving frameworks like the EU AI Act and GDPR, businesses must integrate fairness, accountability, and human oversight into their AI strategies to ensure ethical and sustainable use.

Building Trust and Brand Reputation

Companies adopting AI-driven chatbots or decision systems in Dublin, Belfast, or Manchester must show they handle user data responsibly. If an AI incorrectly denies a bank loan or discriminates in recruitment, negative PR can spread rapidly. Ethical AI fosters a transparent, user-centric approach, maintaining a positive reputation in local communities.

Regulatory Landscape

While GDPR governs data privacy, newer AI-specific rules, such as the EU AI Act and any future UK legislation, emphasise fairness and accountability. Irish and Northern Irish businesses may need to meet both EU and UK standards, and an ethical framework eases cross-border compliance. Overlooking these could lead to hefty fines or legal disputes.

Moral Imperative

Beyond profit, responsible AI stands on moral grounds: ensuring no group is unfairly excluded or harmed. For instance, an AI job-screening tool that penalises certain accents or backgrounds in the UK can perpetuate bias. Ethical checks safeguard inclusivity, reflecting a brand’s core values.

Identifying Bias in AI

Bias in AI can lead to unfair outcomes, affecting hiring, customer service, and decision-making processes. UK and Irish businesses must assess training data, monitor algorithmic outputs, and implement fairness audits to detect and mitigate biases. Regular testing, diverse data sets, and human oversight help ensure AI systems operate equitably. Addressing bias not only improves accuracy but also enhances trust and compliance with evolving regulatory standards.

Data Bias Origins

AI learns patterns from historical data. If training datasets reflect societal biases (e.g., predominantly men in leadership roles), the AI may replicate them in decisions. SMEs must examine if their local data from Ireland or the UK skews heavily towards certain demographics or job roles, inadvertently penalising minority applicants.

Case: Recruitment Tools

Imagine an AI CV parser that’s trained mostly on successful male applicants from large UK cities. It might unintentionally rank female or rural-based applicants lower. Periodic audits of model outputs can reveal such patterns, prompting dataset rebalancing or additional oversight.
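
As a rough illustration of what such an audit might look like, the sketch below compares shortlisting rates between groups. The sample data is invented, the function names are hypothetical, and the 0.8 cut-off is only an illustrative rule of thumb, not a legal test in Ireland or the UK.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of shortlisted applicants per group.

    `decisions` is an iterable of (group, shortlisted) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, shortlisted in decisions:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Compare each group's rate with the highest-rate group."""
    best = max(rates.values())
    if best == 0:
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}

# Hypothetical audit sample: (group, shortlisted?)
sample = (
    [("male", True)] * 30 + [("male", False)] * 20
    + [("female", True)] * 12 + [("female", False)] * 28
)

rates = selection_rates(sample)
ratios = impact_ratios(rates)
# Illustrative threshold only; choose one with legal or ethics advice.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(rates)
print(flagged)
```

A flagged group is a prompt to investigate, not proof of discrimination: the next step would be to look at the training data and the features driving the ranking.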

Language and Regional Accents

Voice-based AI or chatbots deployed in Northern Ireland might incorrectly interpret certain dialects if not tested on local speech samples. This can degrade the user experience or systematically disadvantage certain regions. Ethical deployment includes collecting diverse voice data from Cork, Derry, or Cardiff.

“Bias often creeps in unconsciously—review your training sets for representation of all user segments, from rural Gaelic speakers to underrepresented backgrounds,” suggests Ciaran Connolly.

Key Pillars of Ethical AI

Ethical AI is built on key pillars that ensure fairness, transparency, accountability, and privacy. For UK and Irish businesses, this means using unbiased data, making AI decisions explainable, and maintaining human oversight. Compliance with regulations like GDPR and the EU AI Act is crucial to protect user rights. Prioritising these principles helps businesses foster trust, reduce risks, and ensure AI systems serve society responsibly.

Transparency

Users must understand if and how AI influences decisions—like a bank’s loan approval or a marketing chatbot’s product recommendations. Provide disclaimers: “This recommendation is generated by an AI system,” and offer contact details if the user wants a human review.

Accountability

Firms remain accountable for AI actions. If an ML algorithm denies someone a service or a job, a human-in-the-loop mechanism gives them recourse. In hiring, an appeal or manual review step can mitigate unfair rejections.
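
One way to picture a human-in-the-loop step is as a routing rule that sits between the model and the final decision. The sketch below is a minimal example under assumed names (`Decision`, `route`) and an assumed policy: every decline, and every low-confidence result, goes to a person.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    applicant_id: str
    outcome: str       # "approve" or "decline"
    confidence: float  # the model's own estimate, 0.0 to 1.0

def route(decision, min_confidence=0.9):
    """Send declines and low-confidence results to a human reviewer."""
    if decision.outcome == "decline" or decision.confidence < min_confidence:
        return "human_review"
    return "auto"

# Hypothetical examples
print(route(Decision("a1", "approve", 0.95)))
print(route(Decision("a2", "decline", 0.99)))
print(route(Decision("a3", "approve", 0.60)))
```

The threshold and the choice to review all declines are assumptions; a business with high volumes might review only a sample, as long as affected users can still request a manual check.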

Privacy & Consent

GDPR compliance in Ireland and the UK demands robust data minimisation and user consent. Ethical AI extends that to responsible data usage: gather only the data needed for the AI’s function, anonymise sensitive information, and communicate clearly with users.
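
A minimal sketch of data minimisation in practice follows, assuming a hypothetical record layout and field allow-list. It keeps only the fields the model needs and replaces the email address with a keyed hash. Note that this is pseudonymisation rather than full anonymisation: under GDPR, pseudonymised data still counts as personal data.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the model actually needs.
ALLOWED_FIELDS = {"age_band", "county", "query_text"}

def minimise(record, allowed=ALLOWED_FIELDS):
    """Drop every field that is not on the allow-list."""
    return {key: value for key, value in record.items() if key in allowed}

def pseudonymise(identifier, secret_key):
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_for_training(record, secret_key):
    """Minimise a record and attach a pseudonymous user reference."""
    cleaned = minimise(record)
    cleaned["user_ref"] = pseudonymise(record["email"], secret_key)
    return cleaned

# Hypothetical record and key; store a real key outside the codebase.
raw = {
    "email": "user@example.com",
    "full_name": "Example User",
    "age_band": "25-34",
    "county": "Cork",
    "query_text": "Where is my order?",
}
print(prepare_for_training(raw, secret_key=b"replace-with-a-real-secret"))
```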

Fairness and Inclusivity

Models must treat diverse genders, ages, ethnicities, or local dialects fairly. Testing on local Gaelic text or Northern Irish accent data can ensure more inclusive performance.

Practical Steps for Responsible AI

Implementing responsible AI requires a structured approach. Businesses in the UK and Ireland should start with clear AI governance policies, ensuring alignment with ethical standards and regulatory frameworks like GDPR and the EU AI Act. Regular audits and bias testing help maintain fairness, while transparent AI models improve accountability. Providing human oversight in decision-making and educating teams on AI ethics further strengthens responsible deployment. By embedding these practices, companies can innovate with AI while maintaining trust and compliance.

Data Diversity

SMEs developing local AI solutions—like a Belfast-based chatbot—should gather balanced datasets from varied user demographics (urban vs. rural, different age groups, etc.). This helps the model learn broad patterns, reducing skew or exclusion.
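
To make "balanced" measurable, a team could compare each group's share of the training data with a reference share for the population it serves. The sketch below assumes a hypothetical `area` field and invented reference figures; real figures would come from census data or your own customer base.

```python
from collections import Counter

def representation_gaps(samples, key, reference_shares, tolerance=0.05):
    """Return groups whose share of `samples` falls short of the
    reference share by more than `tolerance`."""
    counts = Counter(sample[key] for sample in samples)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        actual = counts.get(group, 0) / total if total else 0.0
        if expected - actual > tolerance:
            gaps[group] = {"expected": expected, "actual": round(actual, 3)}
    return gaps

# Hypothetical figures for illustration, not real census data.
training = [{"area": "urban"}] * 85 + [{"area": "rural"}] * 15
reference = {"urban": 0.65, "rural": 0.35}

print(representation_gaps(training, "area", reference))
```

Any group that appears in the result is a candidate for extra data collection before the model is retrained.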

Bias Testing

Regularly run scenario tests: e.g., “Does our AI handle queries from Gaelic speakers well?” or “Does it produce different outcomes for men vs. women?” Document results. Adjust training data or weighting to address discovered biases.
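
The "men vs. women" scenario can be run as a simple counterfactual test: change only the protected attribute and see whether the outcome changes. In the sketch below, `toy_predict` is a made-up stand-in for a real model, deliberately biased so the test has something to find.

```python
def counterfactual_flips(predict, records, attribute, values):
    """Return the records whose prediction changes when only
    `attribute` is varied across `values`."""
    flips = []
    for record in records:
        outcomes = {value: predict({**record, attribute: value}) for value in values}
        if len(set(outcomes.values())) > 1:
            flips.append((record, outcomes))
    return flips

# Hypothetical, deliberately biased stand-in for a real model.
def toy_predict(applicant):
    score = applicant["years_experience"] * 10
    if applicant["gender"] == "female":
        score -= 15
    return "approve" if score >= 30 else "decline"

applicants = [
    {"years_experience": 2, "gender": "male"},
    {"years_experience": 4, "gender": "female"},
    {"years_experience": 6, "gender": "male"},
]

flips = counterfactual_flips(toy_predict, applicants, "gender", ["male", "female"])
for record, outcomes in flips:
    print(record, outcomes)
```

A test like this only catches direct use of the attribute. Bias that enters through proxies, such as postcode or school attended, needs the outcome-level audits described elsewhere in this article.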

Explainable AI Tools

Use or build explainable AI frameworks that produce interpretable results. E.g., if a user is denied a service, the system can highlight top factors. If reasons appear discriminatory, the brand can rectify them quickly.
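
For a simple linear scoring model, "top factors" can be read straight from each feature's contribution to the score. The sketch below uses invented weights and feature names; more complex models would typically need a dedicated explainability library instead.

```python
def top_factors(weights, features, n=3):
    """Rank features by the absolute size of their contribution
    (weight * value) to a linear score."""
    contributions = {
        name: weights[name] * value
        for name, value in features.items()
        if name in weights
    }
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:n]

# Hypothetical weights and applicant, for illustration only.
weights = {"missed_payments": -2.0, "income_k": 0.05, "years_at_address": 0.3}
applicant = {"missed_payments": 3, "income_k": 40, "years_at_address": 1}

for name, contribution in top_factors(weights, applicant):
    print(f"{name}: {contribution:+.2f}")
```

If a factor near the top of the list turns out to be a protected characteristic or an obvious proxy for one, that is the signal to revisit the model.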

Ethics Committee or Advisor

Larger SMEs or local councils might convene an ethics panel—mixing data scientists, legal experts, community reps—to oversee AI deployments. Smaller businesses can consult external specialists or local university labs for ethical AI guidance.

Regulatory Approaches and Local Examples

Regulations around AI are evolving rapidly in the UK and Ireland, with frameworks emphasising transparency, fairness, and accountability. The EU AI Act sets strict guidelines for high-risk AI applications, while the UK is taking a more flexible, sector-based approach. Local examples include Ireland’s AI Strategy, which promotes ethical AI development, and the UK’s AI Regulation White Paper, focusing on innovation-friendly oversight. Businesses must stay informed and adapt their AI practices to remain compliant and competitive.

EU AI Act and UK Code

The EU AI Act, in force since August 2024 with obligations phasing in over several years, sorts AI systems into risk-based categories, while the UK may adopt a parallel or slightly different approach. Irish companies (part of the EU) and Northern Irish businesses (often under UK law) must watch both frameworks. Aligning with ethical principles now makes later compliance easier.

Local Partnerships

In Ireland, some tech hubs or universities (Trinity College, University of Galway) offer AI ethics research or consultation. Similarly, in the UK, organisations like The Alan Turing Institute champion responsible AI usage. Collaboration with these bodies can raise your brand’s E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness).

Competitive Advantages of Ethical AI

Ethical AI isn’t just about compliance—it’s a competitive advantage. Businesses that prioritise fairness, transparency, and accountability build stronger customer trust and brand reputation. Ethical AI also reduces legal and reputational risks, ensuring long-term sustainability. Companies that embrace responsible AI practices often see improved user engagement, higher retention rates, and a stronger position in emerging markets where regulations are tightening. By leading with ethics, businesses can differentiate themselves while driving innovation and growth.

Enhanced Customer Loyalty

Consumers prefer brands that respect privacy and fairness. A chatbot that discloses how it uses user data fosters trust. Similarly, an e-commerce site that makes clear it uses AI for product recommendations, while respecting personal details, shows a user-first approach. Over time, word-of-mouth grows.

Positive Brand Image

Media coverage often spotlights AI controversies. Avoid that by proactively championing ethical guidelines. Publicly stating an “AI Code of Conduct” can earn coverage from local press, especially in Northern Ireland or the UK, where trust issues and local community ties run deep.

“Choosing ethical AI is both the right thing and a brand booster—stakeholders see you care about real people, not just tech hype,” remarks Ciaran Connolly.

E-E-A-T in AI Context

In AI-driven content, E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) remains key for ranking and credibility. AI must be guided by human expertise, cite credible sources, and maintain transparency to ensure trust. Balancing automation with human oversight is essential for quality and search visibility.

Showcasing Expertise

If your brand uses AI for key decisions, highlight the qualifications of the data scientists or AI specialists behind it. For E-E-A-T, Google wants to see real credentials—like your ML developer’s background or your partnership with local AI research bodies.

Building Trustworthy Content

Write blog posts or whitepapers explaining your AI approach, usage disclaimers, or bias mitigation. Detailed, honest content about your AI processes can rank better and reassure users, which supports every part of E-E-A-T.

Verifying Real-World Experience

If you pilot an AI-driven recommendation engine, detail real use cases or user feedback (with permission). Show you have first-hand experience implementing the solution. This matches the “Experience” dimension that Google added when it extended E-A-T to E-E-A-T.

Future Outlook: Ethical AI in 2025 and Beyond

As AI adoption accelerates, ethical considerations will shape regulations, consumer trust, and business practices. In 2025 and beyond, companies that prioritise transparency, fairness, and accountability in AI development will gain a competitive edge. Stricter policies, improved bias detection, and ethical AI frameworks will become industry standards, influencing how businesses innovate and engage with users.

Standardised Ethical Frameworks

Expect more uniform guidelines from EU or UK bodies, plus industry associations. SMEs might see checklists or certification programmes. Embracing them early ensures readiness.

Tech Tools for Auditable AI

Vendors increasingly offer “AI governance dashboards,” letting SMEs track model drift, data usage, or decision logs. This fosters quick identification of bias or compliance lapses.
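
Even without a commercial dashboard, the underlying idea can be sketched in a few lines: log every decision, then watch for a shift in outcomes. The example below uses assumed function names and an assumed 10-point threshold, and it treats a change in approval rate as a simple proxy for drift; real monitoring tools usually track input data and accuracy as well.

```python
import json
from datetime import datetime, timezone

def log_decision(log, model_version, outcome, reason):
    """Append one decision to `log` as a JSON line and return the entry."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "outcome": outcome,
        "reason": reason,
    }
    log.append(json.dumps(entry, sort_keys=True))
    return entry

def approval_rate(log):
    """Share of logged decisions whose outcome is 'approve'."""
    outcomes = [json.loads(line)["outcome"] for line in log]
    return outcomes.count("approve") / len(outcomes) if outcomes else 0.0

def has_drifted(baseline_rate, log, max_shift=0.10):
    """True when the logged approval rate differs from the baseline
    by more than `max_shift`."""
    return abs(approval_rate(log) - baseline_rate) > max_shift

# Hypothetical recent decisions from a model labelled "v1.2".
decision_log = []
for outcome in ["approve", "decline", "decline", "decline"]:
    log_decision(decision_log, "v1.2", outcome, "score_threshold")

print(approval_rate(decision_log))
print(has_drifted(0.60, decision_log))
```

In practice the log would be written to durable storage rather than a list, so that it can support an audit or a user's request for human review.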

Consumer Activism

Millennials and Gen Z in London or Dublin might actively boycott or criticise brands accused of unethical AI usage. Social media magnifies complaints. The brand that invests in transparency could garner loyalty from these digitally savvy demographics.

Actionable Steps for SMEs

- Define an AI Ethics Policy: Even a 1-page statement clarifying goals (fairness, transparency) sets the tone for staff.

- Audit Data: Check if your training data underrepresents certain counties in Ireland or minority groups in the UK. Expand or correct as needed.

- Set a Review Mechanism: Periodically test outputs for bias or user dissatisfaction. Document your findings.

- Disclose: On your site, mention you use AI in certain processes, how you handle data, and user rights to human review.

- Collaborate: Seek input from local ethics labs or data science experts. Possibly sponsor or attend relevant workshops in Northern Ireland or the UK.

“A structured approach—policy, data checks, disclaimers—ensures SMEs skip pitfalls and harness AI responsibly for real, lasting benefits,” concludes Ciaran Connolly.

A Blueprint for Responsible AI in Ireland, NI, and the UK

Ethical AI usage isn’t a mere afterthought for businesses across Ireland, Northern Ireland, and the UK—it’s central to future competitiveness and trust. By identifying bias in data, implementing transparency for decisions, and abiding by privacy and fairness guidelines, you align AI solutions with the community’s best interests. This fosters a brand identity steeped in E-E-A-T and mitigates legal or reputational risks that come with rushed, unexamined AI deployments.

As regulators refine laws and consumers become more vocal, early adopters of ethical AI frameworks hold a distinct advantage. By building robust internal policies, forging local partnerships, and championing responsible innovation, your brand can lead the pack—demonstrating not only technical savvy but unwavering commitment to the people AI aims to serve.