Data Quality 101: The Hidden Key to Successful AI Initiatives

When organisations embark on AI initiatives, they often focus on selecting the right tools, hiring data scientists, or designing sophisticated models. Yet, a critical—and sometimes overlooked—factor can make or break these projects: data quality. Even the most advanced algorithms yield misleading outputs if the input data is incomplete, inconsistent, or laced with errors. In 2025, with AI adoption soaring across industries, the principle “garbage in, garbage out” rings truer than ever.

This article delves into why data quality is the hidden linchpin of AI success, how to assess and improve your data readiness, and how a robust data management strategy underpins more accurate, trustworthy machine learning. If your AI models have underperformed or your staff mistrust AI outputs, data quality might be the root cause.

“You can’t expect AI to produce gold from messy data. Ensuring data accuracy, consistency, and relevance is the cornerstone of credible AI insights,” notes Ciaran Connolly, Director of ProfileTree.

Why Data Quality Is Crucial for AI

Data is the backbone of any AI system, and its quality directly impacts the accuracy, reliability, and effectiveness of AI-driven decisions. Poor data—whether incomplete, inconsistent, or biased—can lead to flawed insights, automation errors, and costly business mistakes. High-quality data, on the other hand, ensures that AI models operate efficiently, delivering meaningful results that drive innovation and growth. Investing in data accuracy, consistency, and governance is essential for businesses looking to harness AI’s full potential.

Impact on Model Accuracy

Machine learning algorithms learn patterns from historical data. If that dataset includes duplicates, missing fields, or outdated records, the model forms flawed correlations. Predictions might be off or skewed, eroding user trust. Essentially, poor data quality translates to low predictive reliability.

Resource Waste

Cleaning data after the AI model is built can be more time-consuming and expensive than preparing data in the first place. Repeated re-training or manual corrections hamper your project’s cost-effectiveness. Good data hygiene from the start ensures an efficient pipeline.

Risk of Bias and Ethical Issues

Imbalanced or unrepresentative data can embed biases. For instance, if a recruitment AI is trained on data lacking certain demographics, it may systematically disadvantage them. High-quality, diverse data sets reduce such ethical pitfalls, ensuring fairer outcomes.

Building Trust Among Staff

If employees notice the AI system making bizarre errors or ignoring crucial exceptions, they quickly lose confidence. Demonstrating a commitment to robust data governance, such as verifying data sources and running regular audits, helps staff trust AI-driven decisions.

Key Dimensions of Data Quality

Ensuring high-quality data requires more than just accuracy—it involves multiple dimensions that collectively determine its reliability for AI applications. Key factors such as completeness, consistency, timeliness, and relevance all play a crucial role in shaping effective AI-driven insights. By understanding these dimensions, businesses can proactively refine their data, reducing errors and enhancing AI performance. A strong data foundation is essential for maximising AI’s potential and driving meaningful business outcomes.

Accuracy

Accuracy reflects how well data matches real-world facts. For instance, are customer addresses current? Are product prices correct? Inaccuracies arise from manual entry errors, old records, or miscommunication between departments.

Completeness

Are all necessary attributes present? If your AI model needs location, date, and purchase history, missing any field severely limits analytics. For example, a half-filled product catalogue might hamper a recommendation engine’s ability to suggest relevant items.
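
As a rough illustration, completeness can be measured as the share of records that carry a usable value for each required field. The sketch below is a minimal example under stated assumptions: the sample records, the field names, and the rule that `None` or a blank string counts as missing are all hypothetical.

```python
# Hypothetical sample records; None or a blank string marks a missing value.
records = [
    {"location": "Belfast", "date": "2025-01-10", "purchase_history": "3 orders"},
    {"location": "", "date": "2025-01-11", "purchase_history": None},
    {"location": "Derry", "date": None, "purchase_history": "1 order"},
    {"location": "Newry", "date": "2025-01-12", "purchase_history": "5 orders"},
]
REQUIRED_FIELDS = ["location", "date", "purchase_history"]


def is_missing(value):
    """Treat None and blank strings as missing."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def completeness_by_field(rows, fields):
    """Return the share of rows (0.0 to 1.0) with a usable value, per field."""
    total = len(rows)
    if total == 0:
        return {field: 0.0 for field in fields}
    return {
        field: sum(not is_missing(row.get(field)) for row in rows) / total
        for field in fields
    }


completeness = completeness_by_field(records, REQUIRED_FIELDS)
for field, share in completeness.items():
    print(f"{field}: {share:.0%} complete")
```

A per-field score like this makes it easier to decide which gaps to fix first, since a field the model depends on matters more than one it never reads.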

Consistency

Do multiple systems or records show the same values for the same entity? Inconsistencies can appear if one database lists a client’s name as “John Smith” while another has “Jon Smyth.” AI might treat them as separate persons, yielding duplications or confusion.
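
One way to surface near-matches such as “John Smith” and “Jon Smyth” is approximate string comparison. The sketch below uses `difflib.SequenceMatcher` from Python's standard library; the 0.8 review threshold and the variable names are assumptions for illustration, and a real matching process would normally compare more than the name (for example email or postcode) before merging anything.

```python
from difflib import SequenceMatcher


def name_similarity(a, b):
    """Return a similarity score from 0.0 to 1.0, ignoring case and outer spaces."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


# Assumed threshold; tune it against pairs that a person has already reviewed.
REVIEW_THRESHOLD = 0.8

crm_name = "John Smith"
billing_name = "Jon Smyth"

score = name_similarity(crm_name, billing_name)
# Flag pairs that differ but look alike, so a person can confirm before merging.
needs_review = crm_name != billing_name and score >= REVIEW_THRESHOLD
print(f"similarity={score:.2f}, needs_review={needs_review}")
```

Routing such pairs to a human review queue, rather than merging automatically, avoids combining two genuinely different people who happen to have similar names.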

Timeliness

Data needs to be up to date. Outdated data leads to irrelevant insights—like targeting ex-customers who moved on, or using last quarter’s inventory levels for dynamic pricing. Real-time or near-real-time data feeds can drastically enhance AI’s reliability.
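
A simple freshness check can make timeliness measurable. The following is a sketch only: it assumes each record carries a `last_updated` date and that anything older than 90 days counts as stale, both of which are illustrative choices rather than recommendations.

```python
from datetime import date, timedelta

# Assumed freshness limit; the right value depends on the use case.
MAX_AGE = timedelta(days=90)


def stale_records(rows, today, max_age=MAX_AGE):
    """Return the rows whose 'last_updated' date is older than max_age."""
    return [row for row in rows if today - row["last_updated"] > max_age]


# Hypothetical customer records.
customers = [
    {"id": 1, "last_updated": date(2025, 5, 20)},
    {"id": 2, "last_updated": date(2024, 11, 2)},
    {"id": 3, "last_updated": date(2025, 3, 15)},
]

stale = stale_records(customers, today=date(2025, 6, 1))
print([row["id"] for row in stale])
```

Passing `today` in explicitly, instead of reading the system clock inside the function, keeps the check repeatable when it is rerun during an audit.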

Relevancy

Not all data is valuable for a given AI model. Overloading the system with irrelevant fields can cause noise. A sales forecasting AI, for example, might not need employees’ shoe sizes. Achieving relevancy ensures the model focuses on truly impactful variables.

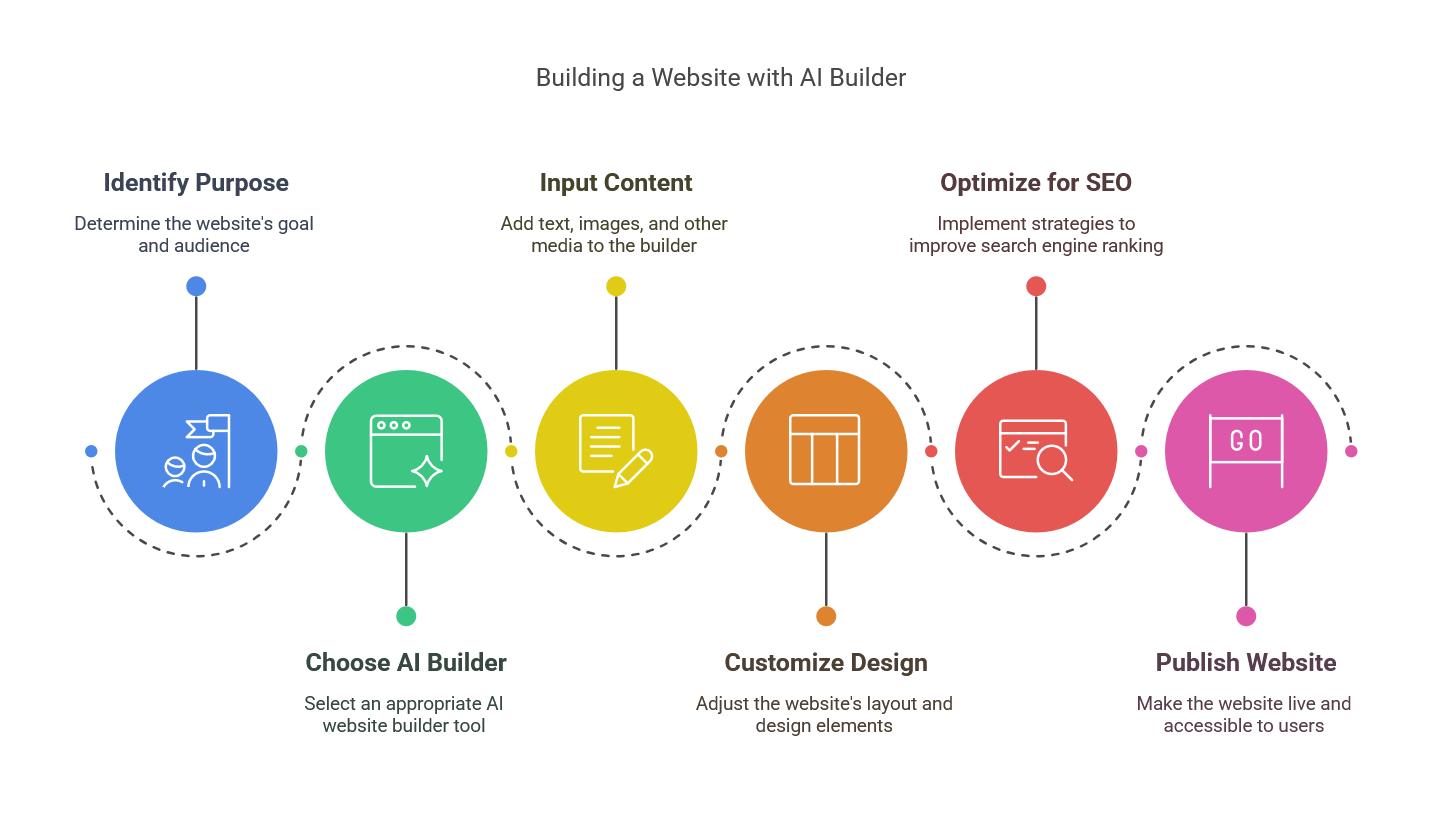

Steps to Assess Current Data Quality

Assessing your current data quality is a crucial first step in ensuring your AI initiatives are built on a solid foundation. This process involves systematically evaluating your data to identify gaps, inconsistencies, or errors that could impact AI performance. Key steps include auditing data sources, checking for completeness and accuracy, identifying duplicate or outdated records, and ensuring consistency across systems. By implementing a structured assessment, businesses can pinpoint areas for improvement and develop strategies to enhance data quality for more reliable AI outcomes.

Data Inventory

Map out every data source, from CRM logs and ERP systems to social media analytics or spreadsheet trackers. Clarify owners, typical data formats, update frequencies, and usage contexts. This inventory reveals potential overlaps or blind spots.

Conduct a Data Profiling Exercise

Profiling tools or scripts examine data sets for common anomalies: missing values, out-of-range values, and unusual outliers. Summaries indicate how many fields are incomplete, how many duplicates exist, or whether certain categories are overrepresented.
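
A profiling pass does not need specialised tooling to get started. The sketch below is a minimal, standard-library example; the order records, the field names, and the valid quantity range of 1 to 1000 are assumptions made up for illustration.

```python
from collections import Counter

# Hypothetical order records.
orders = [
    {"order_id": "A1", "quantity": 2, "region": "North"},
    {"order_id": "A2", "quantity": None, "region": "North"},
    {"order_id": "A2", "quantity": None, "region": "North"},  # exact duplicate
    {"order_id": "A3", "quantity": -5, "region": "South"},  # out of range
    {"order_id": "A4", "quantity": 1, "region": "North"},
]


def profile(rows, numeric_field, valid_range, category_field):
    """Summarise missing values, range violations, duplicates and category counts."""
    low, high = valid_range
    values = [row[numeric_field] for row in rows]
    # Rows are dicts, so convert each to a hashable tuple before counting repeats.
    row_counts = Counter(tuple(sorted(row.items())) for row in rows)
    return {
        "rows": len(rows),
        "missing": sum(v is None for v in values),
        "out_of_range": sum(v is not None and not low <= v <= high for v in values),
        "duplicate_rows": sum(count - 1 for count in row_counts.values()),
        "category_counts": dict(Counter(row[category_field] for row in rows)),
    }


report = profile(orders, "quantity", valid_range=(1, 1000), category_field="region")
for metric, value in report.items():
    print(f"{metric}: {value}")
```

The category counts are the quickest way to spot overrepresentation: here one region supplies four of the five rows.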

Gather User Feedback

Ask employees dealing with data daily about frustrations. They might highlight recurring issues (“Customer phone numbers often get stored incorrectly,” “We have hundreds of unlabelled product variations,” etc.). These on-the-ground insights are invaluable.

Sample Testing

Select random records and manually cross-check them against source documents or reality. Spot-checking small subsets can expose major structural problems—like entire columns systematically misaligned.
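
Drawing the sample programmatically avoids unconsciously picking “easy” records. This is a small sketch assuming records can be referred to by ID; the function name, the sample size of 20, and the seed value are illustrative.

```python
import random


def draw_audit_sample(record_ids, sample_size, seed):
    """Pick a reproducible random sample of record IDs for manual checking."""
    rng = random.Random(seed)  # fixed seed so the same audit can be repeated
    size = min(sample_size, len(record_ids))
    return sorted(rng.sample(record_ids, size))


all_ids = list(range(1, 501))  # stand-in for real record IDs
audit_ids = draw_audit_sample(all_ids, sample_size=20, seed=42)
print(audit_ids)
```

Recording the seed alongside the audit results lets a second reviewer re-draw exactly the same records later.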

Laying the Foundation: Data Governance and Management

Strong data governance is key to AI success, providing clear policies for data collection, security, and usage. Standardised practices improve accuracy, ensure compliance, and build trust in data-driven decisions. By maintaining consistency and integrity, businesses can maximise AI’s potential while minimising risks.

Data Governance Framework

Define roles and policies:

- Data Owners: Typically department leads who ensure data within their domain is accurate and up to date.

- Data Stewards: Oversee day-to-day quality checks, maintain metadata, and facilitate cross-team alignment.

- Data Standards: Outline naming conventions, formatting rules, version control, and security protocols (like GDPR compliance).

Centralised vs. Federated Approaches

Smaller firms often adopt a central data repository (data warehouse or data lake). Larger ones might keep departmental systems but enforce standard APIs or schemas. The key is ensuring consistent definitions—like “customer ID” always meaning the same thing across systems.

Data Cleaning Processes

Set up systematic cleaning steps, for example:

- De-duplication: Merge multiple records referring to the same entity.

- Standardisation: Convert addresses to a uniform format.

- Validation: Check that numeric fields fall within plausible ranges.

- Enrichment: Fill in missing fields from reliable external sources if needed.

Regularly schedule these routines or incorporate them into real-time data ingestion pipelines.
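
The first three steps above can be sketched as a small pipeline. This is an illustrative example, not a definitive implementation: the field names, the plausible age range of 0 to 120, and the choice to de-duplicate on email are assumptions. It also keeps the first record for each email rather than merging field by field, and it leaves enrichment out because that depends on an external source.

```python
def standardise(record):
    """Normalise whitespace and casing so equivalent values compare equal."""
    return {
        "email": record["email"].strip().lower(),
        "postcode": " ".join(record["postcode"].upper().split()),
        "age": record["age"],
    }


def is_valid(record, min_age=0, max_age=120):
    """Validation: accept only records whose age falls in a plausible range."""
    return record["age"] is not None and min_age <= record["age"] <= max_age


def clean(records):
    """Standardise, validate, then de-duplicate on email (first record wins)."""
    seen = {}
    for record in map(standardise, records):
        if is_valid(record) and record["email"] not in seen:
            seen[record["email"]] = record
    return list(seen.values())


# Hypothetical raw input with a formatting duplicate and an implausible age.
raw = [
    {"email": " Ann@Example.com ", "postcode": "bt1  1aa", "age": 34},
    {"email": "ann@example.com", "postcode": "BT1 1AA", "age": 34},
    {"email": "bob@example.com", "postcode": "bt2 2bb", "age": 430},
]

cleaned = clean(raw)
print(cleaned)
```

Standardising before de-duplicating matters: the two “Ann” records only look identical once casing and spacing have been normalised.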

Data Preparation for AI Modelling

Effective AI modelling starts with well-prepared data. This process involves cleaning, organising, and transforming raw data into a structured format suitable for AI algorithms. Key steps include handling missing values, removing duplicates, normalising data, and ensuring consistency across datasets. Proper data preparation enhances model accuracy, reduces bias, and improves overall AI performance. By investing in this crucial step, businesses can ensure their AI systems generate reliable and actionable insights.

Feature Selection

Collaborate with domain experts to determine which fields matter for the AI model’s objective. For instance, a churn prediction model might require purchase frequency, average order value, and last login date, but not your employees’ birthdays. Minimising irrelevant data helps performance and interpretability.

Data Transformation

Data often needs reformatting, for instance converting categorical text fields (like “region: North/South/East”) into numeric encodings. Many models also benefit from normalised or scaled numerical fields, so that features with large ranges do not overshadow those with small ranges. Tools like Python’s Pandas, R, or specialised ETL solutions handle these transformations systematically.
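
To make the two transformations concrete, here is a minimal standard-library sketch of one-hot encoding and min-max scaling. In practice a library such as Pandas or scikit-learn would usually do this; the helper names and sample values below are hypothetical.

```python
def one_hot(values):
    """Encode each value as a list of 0/1 flags, one flag per distinct category."""
    categories = sorted(set(values))
    return categories, [[int(v == c) for c in categories] for v in values]


def min_max_scale(values):
    """Rescale numbers to the 0-1 range; a constant column maps to 0.0."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


# Hypothetical columns from a sales dataset.
regions = ["North", "South", "East", "North"]
order_values = [20.0, 120.0, 70.0, 20.0]

categories, encoded = one_hot(regions)
scaled = min_max_scale(order_values)

print(categories)
print(encoded)
print(scaled)
```

The minimum and maximum used for scaling should come from the training data and be reused for new data, otherwise the same raw value would be scaled differently at prediction time.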

Addressing Class Imbalances

If you’re predicting a rare event (like fraudulent transactions), your data might have high imbalance (99% legitimate, 1% fraud). This can skew many AI models. Strategies like oversampling, undersampling, or synthetic data generation (e.g., SMOTE) rectify the imbalance, improving the model’s detection ability.
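
The simplest of these strategies, random oversampling, can be sketched in a few lines. Note that this is plain duplication of minority rows, not SMOTE, which synthesises new points and is usually taken from a dedicated library. The function name, field names, and sample data are assumptions for illustration.

```python
import random


def oversample_minority(rows, label_key, seed=0):
    """Duplicate random rows of smaller classes until every class matches the largest."""
    rng = random.Random(seed)
    by_label = {}
    for row in rows:
        by_label.setdefault(row[label_key], []).append(row)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        balanced.extend(group + extra)
    rng.shuffle(balanced)
    return balanced


# Hypothetical training data: 99 legitimate transactions and 1 fraudulent one.
train = [{"amount": i, "is_fraud": 0} for i in range(99)]
train.append({"amount": 5000, "is_fraud": 1})

balanced = oversample_minority(train, "is_fraud")
fraud_count = sum(row["is_fraud"] for row in balanced)
print(len(balanced), fraud_count)
```

Resampling should be applied to the training portion only. Validation and test sets should keep the real-world class ratio, or the reported metrics will not reflect how the model behaves in production.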

Splitting Training/Validation/Test Sets

Dividing data properly lets you measure how well the model generalises to records it has not seen. A typical split is 60-70% training, 15-20% validation, and 15-20% test, though SMEs might vary this based on dataset size. The key is preventing data leakage, where the model sees test data during training and performance metrics are artificially inflated.
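
A split along these lines, with a basic leakage check, might look like the sketch below. The 70/15/15 ratios, the seed, and the use of integer record IDs are assumptions; the overlap check only catches the same ID landing in two partitions, not subtler leakage such as duplicate customers under different IDs.

```python
import random


def split_dataset(rows, train=0.7, validation=0.15, seed=0):
    """Shuffle a copy of the rows, then cut it into train, validation and test parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    train_end = round(len(shuffled) * train)
    validation_end = train_end + round(len(shuffled) * validation)
    return (
        shuffled[:train_end],
        shuffled[train_end:validation_end],
        shuffled[validation_end:],
    )


record_ids = list(range(1000))  # stand-in for unique record IDs
train_ids, validation_ids, test_ids = split_dataset(record_ids)

# Leakage check: a record ID must never appear in more than one partition.
overlap = (
    set(train_ids) & set(validation_ids)
    | set(train_ids) & set(test_ids)
    | set(validation_ids) & set(test_ids)
)
print(len(train_ids), len(validation_ids), len(test_ids), len(overlap))
```

For time-dependent data such as sales history, a chronological split (train on older records, test on newer ones) is often a better guard against leakage than a random shuffle.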

Ongoing Monitoring and Maintenance of Data Quality

Maintaining high-quality data is an ongoing process, not a one-time task. Regular monitoring helps detect inconsistencies, errors, or outdated information that could impact AI performance. Implementing automated data validation, routine audits, and real-time anomaly detection ensures data remains accurate and reliable. By continuously refining data quality, businesses can sustain AI efficiency, improve decision-making, and adapt to evolving data needs.

Real-Time Error Detection

Implement scripts or monitoring dashboards that flag suspicious new entries or spikes in missing values. Quick detection of anomalies, like a misconfigured form generating empty fields, prevents corrupt data from polluting the entire dataset.
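
A basic version of such a check compares the missing-value rate of each incoming batch with a known baseline. This is a sketch under assumptions: the `phone` field, the 2% baseline, and the 10-point tolerance are invented for the example, and a production monitor would normally feed an alerting tool rather than print.

```python
def missing_rate(batch, field):
    """Share of records in the batch where the field is absent, None or empty."""
    if not batch:
        return 0.0
    return sum(record.get(field) in (None, "") for record in batch) / len(batch)


def should_alert(batch, field, baseline_rate, tolerance=0.10):
    """Flag the batch when its missing rate exceeds the baseline by more than tolerance."""
    return missing_rate(batch, field) > baseline_rate + tolerance


# Hypothetical batches: a normal one, and one from a misconfigured form.
normal_batch = [{"phone": "555-0100"}] * 19 + [{"phone": ""}]
broken_batch = [{"phone": "555-0100"}] * 8 + [{"phone": ""}] * 12

for name, batch in [("normal", normal_batch), ("broken", broken_batch)]:
    alert = should_alert(batch, "phone", baseline_rate=0.02)
    print(f"{name}: missing={missing_rate(batch, 'phone'):.0%}, alert={alert}")
```

Comparing against a baseline, rather than alerting on any missing value, keeps the monitor quiet during normal operation and loud when something actually breaks.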

Periodic Audits and Data Retention

Schedule quarterly or biannual audits. Remove obsolete records (like old leads not engaged for years) if they no longer serve the model’s scope. Keep versioned backups so you can roll back if new transformations introduce errors.

Updating the Model as Data Evolves

A model trained on last year’s data may degrade if user behaviour or product lines shift. Continuous or scheduled retraining keeps predictions current. For major business pivots—like entering a new market or launching a new product range—recheck the dataset’s structure and relevant features.
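
One lightweight signal that a model may need retraining is the share of recent records containing categories it never saw during training, such as a newly launched product line. The sketch below is a deliberately simple drift indicator; the function name, the sample categories, and the 20% review threshold are assumptions, and fuller drift monitoring would also compare numeric distributions.

```python
def unseen_category_share(training_values, recent_values):
    """Share of recent values whose category never appeared in the training data."""
    if not recent_values:
        return 0.0
    known = set(training_values)
    return sum(value not in known for value in recent_values) / len(recent_values)


# Assumed threshold above which the dataset and features get rechecked.
RETRAIN_REVIEW_THRESHOLD = 0.2

# Hypothetical product categories seen in training and in recent orders.
training_categories = ["shoes", "bags", "shoes", "hats"]
recent_categories = ["shoes", "watches", "watches", "bags"]

share = unseen_category_share(training_categories, recent_categories)
needs_retraining_review = share > RETRAIN_REVIEW_THRESHOLD
print(f"unseen share={share:.0%}, review={needs_retraining_review}")
```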

“Data is never ‘set and forget.’ It’s a living asset. Continuous refinement keeps AI aligned with reality,” reminds Ciaran Connolly.

Balancing Data Access and Security

Balancing data access and security is essential for leveraging AI while protecting sensitive information. Businesses must ensure that employees have the right level of access to data without compromising privacy or compliance. Implementing role-based permissions, encryption, and regular security audits helps safeguard data while enabling efficient AI-driven decision-making. Striking this balance fosters innovation while maintaining trust and regulatory compliance.

Role-Based Access Controls

Not all employees need full read/write access to entire data sets. Restrict sensitive fields—like personal customer details—to authorised personnel. This approach mitigates accidental data corruption and reduces privacy risks.
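
The idea can be illustrated with a field-level filter keyed on role. This is a conceptual sketch only: the roles, field names, and policy table are hypothetical, and real systems usually enforce such rules in the database or API layer rather than in application code like this.

```python
# Hypothetical policy: which fields each role is allowed to see.
VISIBLE_FIELDS = {
    "analyst": {"customer_id", "region", "order_total"},
    "support": {"customer_id", "region", "order_total", "email", "phone"},
}


def view_for_role(record, role):
    """Return only the fields the role may see; unknown roles see nothing."""
    allowed = VISIBLE_FIELDS.get(role, set())
    return {key: value for key, value in record.items() if key in allowed}


customer = {
    "customer_id": 101,
    "region": "North",
    "order_total": 250.0,
    "email": "ann@example.com",
    "phone": "555-0100",
}

print(view_for_role(customer, "analyst"))
print(view_for_role(customer, "intern"))
```

Defaulting unknown roles to an empty view is a deny-by-default choice: a misconfigured account sees too little, never too much.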

Compliance with Regulations

Under GDPR or other frameworks, you must have a lawful basis for storing personal data, fulfil requests for data deletion or correction, and promptly report breaches if they occur. AI projects can inadvertently expand data usage, so robust compliance is crucial.

Secure Data Pipelines

Encrypt data in transit and at rest. If your AI or ETL processes involve external services, confirm they meet the same security standards. Minimising the risk of hacking or data leaks is fundamental to preserving trust in your organisation.

Building a Data-Centric Culture

Creating a data-centric culture ensures that data quality becomes a shared responsibility across the organisation. Encouraging employees to prioritise data accuracy, follow best practices, and leverage insights for decision-making strengthens AI outcomes. Providing training, clear guidelines, and leadership support helps embed data-driven thinking into daily operations. When data is valued as a strategic asset, businesses can drive better AI performance and long-term success.

Leadership Advocacy

Executives should champion the phrase “quality data is everyone’s responsibility.” If data governance is solely an IT function, departmental staff might bypass processes or neglect proper data entry, undermining the entire initiative.

Rewarding Good Data Practices

If staff ensure thorough, accurate input, reduce duplicates, or identify data discrepancies, recognise their efforts. This fosters pride in data hygiene. Conversely, if employees cause repeated errors, address them in performance reviews.

Staff Training on Data Literacy

Many data quality issues arise from misunderstandings, such as the expected input format or the significance of certain fields. Provide short training modules so staff understand the why behind data guidelines. This gives them a stake in the bigger AI picture, reinforcing that each record entry matters.

Future Outlook: Emergence of DataOps and Advanced Tools

The future of data management is evolving with the rise of DataOps and advanced AI-driven tools. DataOps streamlines data workflows, ensuring continuous integration, quality, and accessibility for AI applications. Emerging technologies like automated data cleansing, real-time monitoring, and AI-powered analytics are revolutionising how businesses manage and utilise data. As these innovations grow, companies that adopt agile, automated data practices will gain a competitive edge in AI-driven decision-making.

DataOps for Streamlined Development

A rising trend is DataOps, which applies DevOps-like principles to data pipelines. Automated testing, continuous integration, and real-time monitoring ensure that data transformations remain consistent and thoroughly verified. SMEs can adopt lightweight DataOps frameworks to gain a professional approach to data management.
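
At its most lightweight, automated data testing is a list of small checks that every batch must pass before it moves further down the pipeline. The sketch below assumes hypothetical `customer_id` and `order_total` fields and made-up check names; dedicated data-testing frameworks offer the same idea with richer reporting.

```python
def check_no_missing_ids(rows):
    """Every row must carry a non-empty customer_id."""
    return all(row.get("customer_id") not in (None, "") for row in rows)


def check_unique_ids(rows):
    """No customer_id may appear twice in the batch."""
    ids = [row.get("customer_id") for row in rows]
    return len(ids) == len(set(ids))


def check_totals_non_negative(rows):
    """Order totals must be zero or positive."""
    return all(row["order_total"] >= 0 for row in rows)


CHECKS = [check_no_missing_ids, check_unique_ids, check_totals_non_negative]


def run_checks(rows, checks=CHECKS):
    """Return the names of failed checks; an empty list means the batch passes."""
    return [check.__name__ for check in checks if not check(rows)]


# Hypothetical batches: one clean, one with a repeated ID and a negative total.
good_batch = [
    {"customer_id": "C1", "order_total": 10.0},
    {"customer_id": "C2", "order_total": 0.0},
]
bad_batch = [
    {"customer_id": "C1", "order_total": 10.0},
    {"customer_id": "C1", "order_total": -4.0},
]

print(run_checks(good_batch))
print(run_checks(bad_batch))
```

Run as part of continuous integration or at ingestion time, a failing list like this can block a deployment or quarantine a batch before bad data reaches the model.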

AI-Powered Data Cleaning

Ironically, AI itself can help detect anomalies or fill in missing fields based on patterns. Some advanced solutions claim to automate large portions of data wrangling. However, oversee these processes carefully—AI guesses are never a substitute for verifying crucial, high-stakes data points.

Cross-Enterprise Data Ecosystems

Increasingly, businesses share data with partners or customers for collaborative AI insights. Ensuring data quality extends beyond your firewall. This “ecosystem approach” can yield bigger data sets and richer insights, but demands even stricter governance across multiple parties.

Elevating AI Potential Through Data Excellence

The best AI algorithms in the world stumble if fed with inaccurate, incomplete, or inconsistent data. In 2025, as more organisations rely on machine learning for critical decisions—like product recommendations, credit risk assessment, or supply chain optimisations—data quality is emerging as the true backbone of sustainable AI success.

By formalising data governance, implementing structured cleaning and validation routines, and cultivating a culture where staff see data as an asset to be nurtured, you unlock the full promise of AI. Clean, consistent data leads to more trustworthy insights, fosters staff confidence, and accelerates your ROI on advanced analytics. Remember, “garbage in, garbage out” remains a steadfast truth in AI—so invest in data quality from day one and watch your AI initiatives flourish.