Navigating AI and GDPR in Ireland: 6 Pitfalls to Avoid


Navigating AI and GDPR in Ireland presents unique challenges for businesses looking to harness the power of AI while ensuring compliance with strict data protection regulations. Whether you’re an SME using a chatbot or a multinational with an R&D presence in Dublin, understanding how to manage personal data responsibly is crucial. This article highlights 6 common pitfalls Irish businesses face when deploying AI under GDPR and provides practical guidance to ensure legal compliance and maintain user trust.

“GDPR compliance is not optional. As businesses integrate AI, they must ensure robust safeguards to protect user data or face regulatory and reputational fallout,” warns Ciaran Connolly, Director of ProfileTree.

Pitfall 1: Collecting Personal Data Without Proper Consent

Pitfall 1 highlights the risk of collecting personal data without proper consent. Many AI tools, such as chatbots, inadvertently gather personal and even sensitive information, which can lead to GDPR violations if you process it without valid consent or another lawful basis.

Overview

Many AI-driven solutions, especially chatbots, archive user inputs. If these inputs include names, email addresses, or other personal details, you are collecting personal data under GDPR. Some SMEs neglect to ask for explicit consent or bury the data-usage statement in a rarely read policy.

How to Avoid

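- Prominent Consent Notice: On your chatbot or web form, display a concise statement and ask for an active opt-in, for example: “We use your data to improve chatbot accuracy. Tick this box to agree; see our privacy policy for details.” Simply continuing to use a site is not a clear affirmative action, so it does not count as valid consent under GDPR.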

- Granular Consent: If data is used for multiple purposes (e.g. analytics, marketing), ask for consent to each purpose separately, for example with one checkbox per purpose.

- Privacy Policy: Explain in clear, plain language how your AI processes data, how long it is stored, who can access it, and how it is anonymised.
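
To make granular consent concrete, here is a minimal Python sketch of a per-purpose consent record. The purpose names, `ConsentRecord`, and `store_transcript` are hypothetical; the idea is that every purpose defaults to "no" and every change is timestamped, which helps you demonstrate consent as GDPR Article 7(1) requires.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical purposes; use the ones named in your own privacy policy.
PURPOSES = ("chatbot_improvement", "analytics", "marketing")


@dataclass
class ConsentRecord:
    user_id: str
    # Every purpose starts as False: no processing until the user opts in.
    granted: dict[str, bool] = field(default_factory=lambda: {p: False for p in PURPOSES})
    # (UTC timestamp, purpose, value) tuples, kept so consent can be demonstrated later.
    history: list[tuple[str, str, bool]] = field(default_factory=list)

    def set_consent(self, purpose: str, value: bool) -> None:
        if purpose not in PURPOSES:
            raise ValueError(f"unknown purpose: {purpose}")
        self.granted[purpose] = value
        self.history.append((datetime.now(timezone.utc).isoformat(), purpose, value))

    def allows(self, purpose: str) -> bool:
        return self.granted.get(purpose, False)


def store_transcript(record: ConsentRecord, text: str, store: list[str]) -> bool:
    """Keep a chatbot transcript only if the user opted in to that purpose."""
    if not record.allows("chatbot_improvement"):
        return False
    store.append(text)
    return True


# Usage
rec = ConsentRecord("user-123")
transcripts: list[str] = []
print(store_transcript(rec, "Where is my order?", transcripts))  # False: no opt-in yet
rec.set_consent("chatbot_improvement", True)
print(store_transcript(rec, "Where is my order?", transcripts))  # True
```

In practice the record would live in a database, and withdrawing consent (`set_consent(..., False)`) should be as easy as giving it.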

Pitfall 2: Storing Data Indefinitely in AI Systems

Pitfall 2 addresses the danger of storing data indefinitely in AI systems. Retaining personal data for longer than necessary can lead to GDPR non-compliance and potential privacy risks.

Overview

GDPR’s storage limitation principle requires keeping personal data only as long as it is needed for its purpose. AI models and logs can accumulate huge volumes of user data over indefinite periods. If your ChatGPT integration or custom ML system retains every user conversation, you risk GDPR violations.

How to Avoid

- Auto-Deletion or Anonymisation: Implement a retention policy (e.g., purge personal data after 30 days). Tools that anonymise transcripts and remove user identifiers are recommended.

- Audit Trails: Map how data flows from the chatbot to your database and into analytics logs, and make sure no backup keeps personal data indefinitely.

- User Controls: Honour users’ right to request deletion of their data. If your AI logs contain their personal information, delete or anonymise it on request.
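
A retention policy and erasure requests can be sketched as two filters over stored log entries. This assumes an in-memory list of hypothetical `LogEntry` objects and uses the 30-day example period from above; a real system would run the purge on a schedule and apply it to backups and replicas too.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Example period from the text; set the value your retention policy specifies.
RETENTION = timedelta(days=30)


@dataclass
class LogEntry:
    user_id: str
    created_at: datetime  # timezone-aware UTC timestamp
    text: str


def purge_expired(logs: list[LogEntry], now: Optional[datetime] = None) -> list[LogEntry]:
    """Keep only entries younger than the retention period."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [e for e in logs if now - e.created_at < RETENTION]


def erase_user(logs: list[LogEntry], user_id: str) -> list[LogEntry]:
    """Honour an erasure request by dropping every entry linked to the user."""
    return [e for e in logs if e.user_id != user_id]


# Usage with a fixed "now" so the result is predictable
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
logs = [
    LogEntry("u1", now - timedelta(days=45), "old chat"),
    LogEntry("u2", now - timedelta(days=3), "recent chat"),
]
print([e.text for e in purge_expired(logs, now)])  # ['recent chat']
```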

Pitfall 3: Failing to Assess Third-Party AI Tools

Pitfall 3 focuses on the importance of assessing third-party AI tools. Relying on external vendors without ensuring their GDPR compliance can expose businesses to significant data protection risks.

Overview

SMEs often rely on SaaS AI solutions hosted abroad. Yet GDPR restricts transferring personal data outside the EEA unless specific safeguards (like standard contractual clauses or the EU-US Data Privacy Framework) are in place.

How to Avoid

- Vendor Check: Assess each AI vendor’s data protection stance. If they are US-based, do they rely on a recognised transfer mechanism? Do they act as a processor under GDPR?

- Contractual Protections: Sign a data processing agreement specifying responsibilities, including sub-processor disclosures.

- Due Diligence: Investigate the vendor’s track record. Google or Microsoft might be safer bets with established compliance structures, while smaller unknown providers might be riskier.
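
The vendor questions above can be captured as a simple checklist. This sketch is hypothetical: the field names are illustrative, the accepted safeguards are limited to the two mentioned earlier, and the output is a list of open questions to put to the vendor, not a legal conclusion.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative only: the two safeguards mentioned in the text.
RECOGNISED_MECHANISMS = {"standard_contractual_clauses", "eu_us_data_privacy_framework"}


@dataclass
class Vendor:
    name: str
    stores_data_in_eea: bool
    transfer_mechanism: Optional[str]  # None if the vendor names no mechanism
    acts_as_processor: bool
    dpa_signed: bool
    discloses_subprocessors: bool


def open_questions(v: Vendor) -> list[str]:
    """List the checks from the vendor review that are still unresolved."""
    gaps = []
    if not v.stores_data_in_eea and v.transfer_mechanism not in RECOGNISED_MECHANISMS:
        gaps.append("No recognised safeguard for transfers outside the EEA")
    if not v.acts_as_processor:
        gaps.append("Processor role not confirmed")
    if not v.dpa_signed:
        gaps.append("No data processing agreement signed")
    if not v.discloses_subprocessors:
        gaps.append("Sub-processors not disclosed")
    return gaps


# Usage with a made-up vendor
chatbot_saas = Vendor("ExampleBot Inc.", False, None, True, False, True)
for question in open_questions(chatbot_saas):
    print("-", question)
```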

“Selecting an AI vendor is about more than features. GDPR compliance is crucial—particularly for sensitive data that chatbots or ML tools might handle,” says Ciaran Connolly.

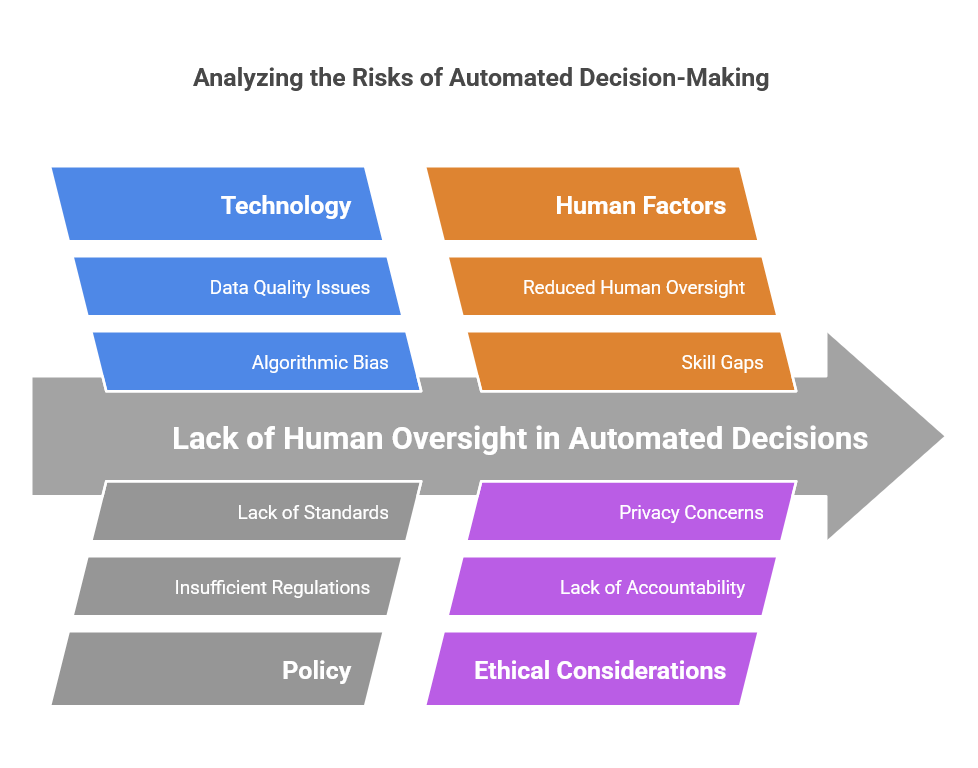

Pitfall 4: Lack of Human Oversight in Automated Decisions

Pitfall 4 highlights the risks of lacking human oversight in automated decisions. Fully automating processes without human intervention can violate GDPR’s requirements for transparency and user rights.

Overview

GDPR’s Article 22 gives individuals the right not to be subject to decisions based solely on automated processing that produce legal or similarly significant effects. Fully automating processes such as credit approval or job-application screening without a human in the loop could violate that right.

How to Avoid

- Human-in-the-Loop: For decisions that heavily impact individuals (loans, hiring, insurance claims), keep a review step by a staff member. Let users appeal or request a manual review.

- Explainability: Document how your AI system reaches certain decisions. If a user challenges it, you can provide a rationale.

- Clear Notice: Tell users when an AI system assists in making decisions and that they can request human intervention.
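
One way to enforce human-in-the-loop review is to route any decision flagged as having a significant effect to a review queue instead of finalising it automatically. The sketch below assumes a hypothetical `Decision` record that also stores the reasons behind the model’s score (supporting explainability), and it lets users request a manual review of automated outcomes; the 0.5 threshold is an arbitrary placeholder.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(eq=False)  # identity comparison, so queue membership tracks this exact case
class Decision:
    subject_id: str
    model_score: float
    reasons: list[str]        # human-readable factors behind the score, for explainability
    significant_effect: bool  # e.g. loans, hiring, insurance claims
    status: str = "pending"
    reviewer: Optional[str] = None


review_queue: list[Decision] = []


def route(decision: Decision, threshold: float = 0.5) -> str:
    """Send significant decisions to a person; automate only low-impact ones."""
    if decision.significant_effect:
        decision.status = "awaiting_human_review"
        review_queue.append(decision)
    else:
        decision.status = "approved" if decision.model_score >= threshold else "declined"
    return decision.status


def request_manual_review(decision: Decision) -> None:
    """Let a user contest an automated outcome so that a staff member re-decides it."""
    decision.status = "awaiting_human_review"
    if decision not in review_queue:
        review_queue.append(decision)


def human_review(decision: Decision, reviewer: str, approve: bool) -> str:
    """Record the staff member's final call and remove the case from the queue."""
    decision.reviewer = reviewer
    decision.status = "approved" if approve else "declined"
    if decision in review_queue:
        review_queue.remove(decision)
    return decision.status
```

Keeping `reasons` on every decision means a staff reviewer, and any user who challenges the outcome, can see what drove the score.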

Pitfall 5: Not Conducting DPIAs (Data Protection Impact Assessments)

Pitfall 5 underscores the importance of conducting Data Protection Impact Assessments (DPIAs). Failing to assess the risks of AI systems can lead to non-compliance and jeopardize user privacy.

Overview

A DPIA is mandatory where processing is “likely to result in a high risk to the rights and freedoms of natural persons” (GDPR Article 35). AI, especially if it is complex or handles sensitive data, often meets that threshold. Even so, some Irish companies skip this step and risk non-compliance.

How to Avoid

- Identify High-Risk Scenarios: If your AI uses personal data, especially health, financial, or location data, do a DPIA.

- Structure Your DPIA: Outline the data flows, the potential risks, and the measures that mitigate them (such as encryption and access controls).

- Seek Guidance: The Irish Data Protection Commission has resources on DPIAs. If uncertain, consult a data protection officer or legal expert.
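
The DPIA structure (data flows, risks, mitigations) can be mirrored in a small risk register. The likelihood and severity scales and the score cut-offs below are illustrative assumptions, loosely modelled on common likelihood-times-severity matrices; calibrate them with your DPO or legal adviser.

```python
from dataclasses import dataclass, field

# Illustrative 3-point scales; agree your own with your DPO or legal adviser.
LIKELIHOOD = {"remote": 1, "possible": 2, "probable": 3}
SEVERITY = {"minimal": 1, "significant": 2, "severe": 3}


@dataclass
class Risk:
    description: str
    likelihood: str
    severity: str
    mitigations: list[str] = field(default_factory=list)

    def score(self) -> int:
        return LIKELIHOOD[self.likelihood] * SEVERITY[self.severity]

    def level(self) -> str:
        # Assumed cut-offs on the 1-9 product: 6+ high, 3-4 medium, 1-2 low.
        s = self.score()
        if s >= 6:
            return "high"
        return "medium" if s >= 3 else "low"


@dataclass
class DPIA:
    system: str
    data_flows: list[str]  # e.g. "chat widget -> vendor API -> EU log store"
    risks: list[Risk]

    def unmitigated_high_risks(self) -> list[Risk]:
        """High risks with no recorded mitigation: resolve these before launch."""
        return [r for r in self.risks if r.level() == "high" and not r.mitigations]
```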

Pitfall 6: Overreliance on AI for Sensitive Advice

Pitfall 6 warns against overrelying on AI for sensitive advice. Using AI for legal, medical, or financial guidance without proper disclaimers can mislead users and breach GDPR’s fairness principles.

Overview

Generative AI tools can produce content that appears authoritative but might be inaccurate or incomplete. If a site uses AI to offer legal, medical, or financial advice without disclaimers, it could mislead users—breaching consumer protection or GDPR’s fairness principles if personal data factors in.

How to Avoid

- Disclaimers: If the chatbot answers questions on sensitive topics, state clearly that its replies are general information, not professional advice.

- Limit the AI’s Scope: For example, restrict healthcare chatbots to general wellness tips and instruct them to direct serious cases to a real professional.

- Human Review: If a query crosses a defined risk threshold, for example by describing symptoms of a serious condition, escalate it to a qualified staff member.
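
Scope limits and escalation can start as a simple pre-filter in front of the model. In this sketch, `generate_answer` stands in for whatever AI service you call, and the trigger patterns and messages are hypothetical placeholders. Keyword matching will miss many cases, so treat it as a first layer, not a safety guarantee.

```python
import re
from typing import Callable

# Hypothetical triggers; a real list needs review by qualified professionals.
ESCALATION_PATTERNS = [r"\bchest pain\b", r"\boverdose\b", r"\bsuicid"]
DISCLAIMER = "This is general wellness information, not professional medical advice."
HANDOFF = ("This sounds like something a qualified professional should look at. "
           "We are passing your question to a member of our team. "
           "If this is an emergency, contact the emergency services now.")


def handle_query(query: str, generate_answer: Callable[[str], str]) -> dict:
    """Escalate high-risk queries to a human; answer the rest with a disclaimer."""
    if any(re.search(p, query, re.IGNORECASE) for p in ESCALATION_PATTERNS):
        return {"action": "escalate", "reply": HANDOFF}
    answer = generate_answer(query)
    return {"action": "answer", "reply": f"{answer}\n\n{DISCLAIMER}"}


# Usage with a stand-in for the model call
print(handle_query("Any tips for better sleep?", lambda q: "Keep a regular bedtime.")["action"])  # answer
print(handle_query("I have chest pain", lambda q: "")["action"])  # escalate
```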

“Ethical usage of AI is pivotal. Encouraging disclaimers and human checks ensures we don’t undermine consumer trust or breach data ethics,” says Ciaran Connolly.

Strategies to Maintain AI–GDPR Compliance

This section outlines strategies for maintaining AI–GDPR compliance over time: data minimisation, transparent communication, access control, and regular audits.

Minimising Personal Data

If an AI use case doesn’t strictly require personal data, remove or anonymise it. For example, a chatbot can handle generic shipping queries without storing user names. The less personal data you collect, the fewer GDPR headaches you face.
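
A minimal redaction step, applied before a transcript is stored, might look like this. The regular expressions are rough assumptions (the phone pattern targets Irish-style numbers) and will not catch names or every format, so this reduces, rather than eliminates, the personal data you keep.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Rough match for Irish-style numbers (+353 or a leading 0); an assumption, not exhaustive.
PHONE = re.compile(r"(?:\+353|\b0)[\d\s-]{7,12}\d")


def minimise(text: str) -> str:
    """Replace email addresses and phone numbers with placeholders before storage."""
    text = EMAIL.sub("[email]", text)
    return PHONE.sub("[phone]", text)


print(minimise("I'm jane.doe@example.ie, call 087 123 4567 about order 5521"))
# I'm [email], call [phone] about order 5521
```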

Transparent User Communication

Use accessible language. If the AI uses conversation data to improve its responses, say so plainly: “We record conversation content to improve future answers and delete it after 30 days.” Honest notices foster trust and meet GDPR’s transparency requirement.

Access Controls and Logging

Ensure only authorised staff can access the AI’s logs. Implement role-based permissions in your AI platform and in any tool that aggregates its data. Keep audit logs of who accessed data or changed the knowledge base; these records support accountability if a data breach or compliance check arises.
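
Role-based permissions with an audit trail can be sketched in a few lines. The roles and actions are hypothetical; the design choice worth copying is that refused attempts are logged alongside granted ones.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions; map them to your own staff roles.
ROLE_PERMISSIONS = {
    "support_agent": {"read_logs"},
    "kb_editor": {"read_logs", "edit_knowledge_base"},
    "dpo": {"read_logs", "export_logs", "delete_logs"},
}

audit_log: list[dict] = []


def authorise(user: str, role: str, action: str) -> bool:
    """Check a role-based permission and record the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed
```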

Regular Audits

Set a quarterly or twice-yearly schedule to re-check compliance. As you expand the AI’s scope or add new features, reassess whether your data usage has changed. If it has, you may need a new DPIA or an updated privacy policy.

Future Developments in Irish AI Regulation

This section explores future developments in Irish AI regulation, highlighting potential changes in compliance requirements, including the EU AI Act and evolving guidelines from the Irish Data Protection Commission.

The EU AI Act

Though separate from GDPR, the EU AI Act (Regulation (EU) 2024/1689), which entered into force in August 2024 and applies in phases, introduces extra rules for “high-risk” AI systems, such as those used in recruitment, healthcare, or credit scoring. Irish businesses operating in these categories should track its implementation timeline. Obligations for high-risk systems include human oversight, data quality and governance requirements, and conformity assessments.

Evolving DPC Guidance

The Irish Data Protection Commission (DPC) may release updated guidelines or enforcement stances addressing generative AI. This could clarify whether certain prompt logs are considered personal data or how to handle ephemeral data storage.

“We expect more clarity from EU-level proposals—like the AI Act. Irish SMEs using AI in high-impact areas need to plan for compliance expansions,” says Ciaran Connolly.

Case Study: A Dublin-Based Legal Startup

This case study examines how a Dublin-based legal startup successfully navigated GDPR compliance while using AI, demonstrating practical steps to mitigate risks and ensure data protection.

Scenario

A small legal firm uses a GPT-based summariser for client case files, feeding it partial client data to generate quick briefs. The firm was concerned about GDPR pitfalls, since the files contain personal data and may reveal sensitive details (medical or criminal history, etc.).

Compliance Steps

- They performed a DPIA, identifying the risk of storing personal data on remote servers.

- They configured ChatGPT usage with pseudonymised references (client code or case ID, no real names).

- They updated privacy policies and disclaimers for staff usage.

- They store logs on an EU-based server with 14-day auto-deletion.
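
The firm’s approach of sending case IDs instead of names can be sketched as a reversible substitution whose key never leaves the firm. `Pseudonymiser` is a hypothetical helper that assumes you already know which client names appear in a file. Data treated this way is pseudonymised rather than anonymised, so under GDPR it is still personal data.

```python
import itertools


class Pseudonymiser:
    """Swap known client names for case IDs; the lookup key stays inside the firm."""

    def __init__(self, prefix: str = "CASE"):
        self._prefix = prefix
        self._counter = itertools.count(1)
        self.key: dict[str, str] = {}  # real name -> case ID, never sent to the AI service

    def case_id(self, name: str) -> str:
        if name not in self.key:
            self.key[name] = f"{self._prefix}-{next(self._counter):04d}"
        return self.key[name]

    def pseudonymise(self, text: str, names: list[str]) -> str:
        # Replace longer names first so "Mary Byrne" is handled before "Mary".
        for name in sorted(names, key=len, reverse=True):
            text = text.replace(name, self.case_id(name))
        return text

    def restore(self, text: str) -> str:
        """Map case IDs in the AI's summary back to real names, locally."""
        for name, cid in self.key.items():
            text = text.replace(cid, name)
        return text
```

A brief returned as "CASE-0001 seeks damages" can then be turned back into a named document with `restore`, on the firm’s own systems.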

Outcome

No data breaches or user complaints. The firm’s staff cut drafting time by 40%, freeing them to focus on deeper legal strategy. The firm remains fully GDPR-compliant, with no regulatory flags.

Safeguarding User Data While Harnessing AI in Ireland

AI solutions such as chatbots, generative text, and advanced analytics enable Irish businesses to streamline operations and improve user experiences. Yet GDPR requires a careful approach to data collection, storage, and automated decision-making. By avoiding the six pitfalls outlined here (lack of consent, indefinite data storage, ignoring vendor compliance, purely automated decisions with significant effects, skipping DPIAs, and irresponsible use of AI for critical advice), companies can stay on the right side of regulators and keep their users’ trust.

Ultimately, AI adoption thrives when privacy is built into development. Transparent user notices, robust anonymisation, and human review wherever decisions significantly affect people keep AI projects ethically sound and legally safe. As AI usage in Ireland intensifies, staying vigilant about GDPR compliance builds user confidence, so your business can reap the transformative benefits of AI without risking heavy fines or reputational damage.

Conclusion: Navigating AI and GDPR in Ireland

As AI adoption continues to grow across Irish businesses, the importance of ensuring compliance with GDPR cannot be overstated. Navigating the complexities of data privacy while leveraging AI technologies requires a well-structured approach. By avoiding the six common pitfalls outlined in this article, businesses can safeguard their user data, maintain compliance with GDPR, and protect their reputation. A proactive strategy that includes precise consent mechanisms, transparent data policies, regular audits, and a commitment to human oversight in automated decisions will mitigate the risks associated with AI integration. As regulatory frameworks evolve, staying ahead of the curve will ensure businesses can harness AI’s potential while maintaining the trust and confidence of their users.